Impact Intelligence is the title of my latest book. It explains how

to improve awareness of the business impact of new initiatives. The

Classic Enterprise thinks of the expenditure on these initiatives as

discretionary spend. A software business might account for it

as R&D expenditure. Written with a framing of investment

governance, the book is aimed at the execs who approve investments.

They are the ones with the authority to introduce change. They also have

the greatest incentive to do so because they are answerable to

investors. But they are not the only ones. Tech CXOs have an incentive

to push for impact intelligence too.

Consider this. You are a CTO or other tech CXO such as a CIO or CDO

(Digital/Data). Your teams take on work prioritized by a Product organization or

by a team of business relationship managers (BRM). More than ever, you are being asked to report and

improve the productivity of your teams. Sometimes, this is part of a budget

conversation. A COO or CFO might ask you, “Is increasing the budget the

only option? What are we doing to improve developer productivity?” More

recently, it has become part of the AI conversation. As in, “Are we using

AI to improve developer productivity?” Or even, “How can we

leverage AI to lower the cost per story point?” That’s self-defeating

unit economics in overdrive! It aims to optimize a metric

that has little to do with business impact. This could, and usually does, backfire.

While it is okay to ensure that everyone

pulls their weight, the current developer productivity mania feels a bit

much. And it misses the point. This has been stressed time

and again.

You might already know this. You know that developer productivity is in

the realm of output. It matters less than outcome and

impact. It’s of no use if AI improves productivity without making a

difference to business outcomes. And that’s a real risk for many companies

where the correlation between output and outcome is weak.

The question is, how do you convince your COO or CFO to fixate less on

productivity and more on overall business impact?

Even if there is no productivity pressure, a tech CXO could still use the guidance here

to improve the awareness of business impact of various efforts. Or if you are a product CXO, that’s even better.

It would be easier to implement the recommendations here if you are on board.

Impact Trumps Productivity

In factory production, productivity is measured as units produced per

hour. In construction, it might be measured as the cost per square foot.

In these domains, worker output is tangible, repeatable, and performance

is easy to benchmark. Knowledge work, on the other hand, deals in

ambiguity, creativity, and non-routine problem-solving. Productivity of

knowledge work is harder to quantify and often decoupled from direct

business outcomes. More hours or output (e.g., lines of code, sprint

velocity, documents written, meetings attended) do not necessarily lead

to greater business value. That’s unless you are a service provider and your

revenue is purely in terms of billable hours. As a technology leader,

you must highlight this. Otherwise, you could get trapped in a vicious

cycle. It goes like this.

As part of supporting the business, you continue to deliver new

digital products and capabilities. However, the commercial (business)

impact of all this delivery is often unclear. This is because

impact-feedback loops are absent. Faced with unclear impact, more ideas

are executed to move the needle somehow. Spray and pray! A

feature factory takes shape. The tech estate balloons.

Figure 1: Consequences of Unclear Business Impact

All that new stuff must be kept running. Maintenance (Run, KTLO)

costs mount. It limits the share of the budget available for new

development (Change, R&D, Innovation). When you ask your COO or CFO

for an increase in budget, they ask you to improve developer

productivity instead. Or they ask you to justify your demand in terms of

business impact. You struggle to provide this justification because of a

general deficit of impact intelligence within the organization.

If you’d like to stop getting badgered about developer productivity,

you must find a way to steer the conversation in a more constructive

direction. Reorient yourself. Pay more attention to the business impact

of your teams’ efforts. Help grow impact intelligence. Here’s an

introduction.

Impact Intelligence

Impact Intelligence is the constant awareness of the

business impact of initiatives: tech initiatives, R&D initiatives,

transformation initiatives, or business initiatives. It entails tracking

contribution to key business metrics, not just to low-level

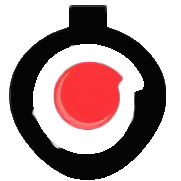

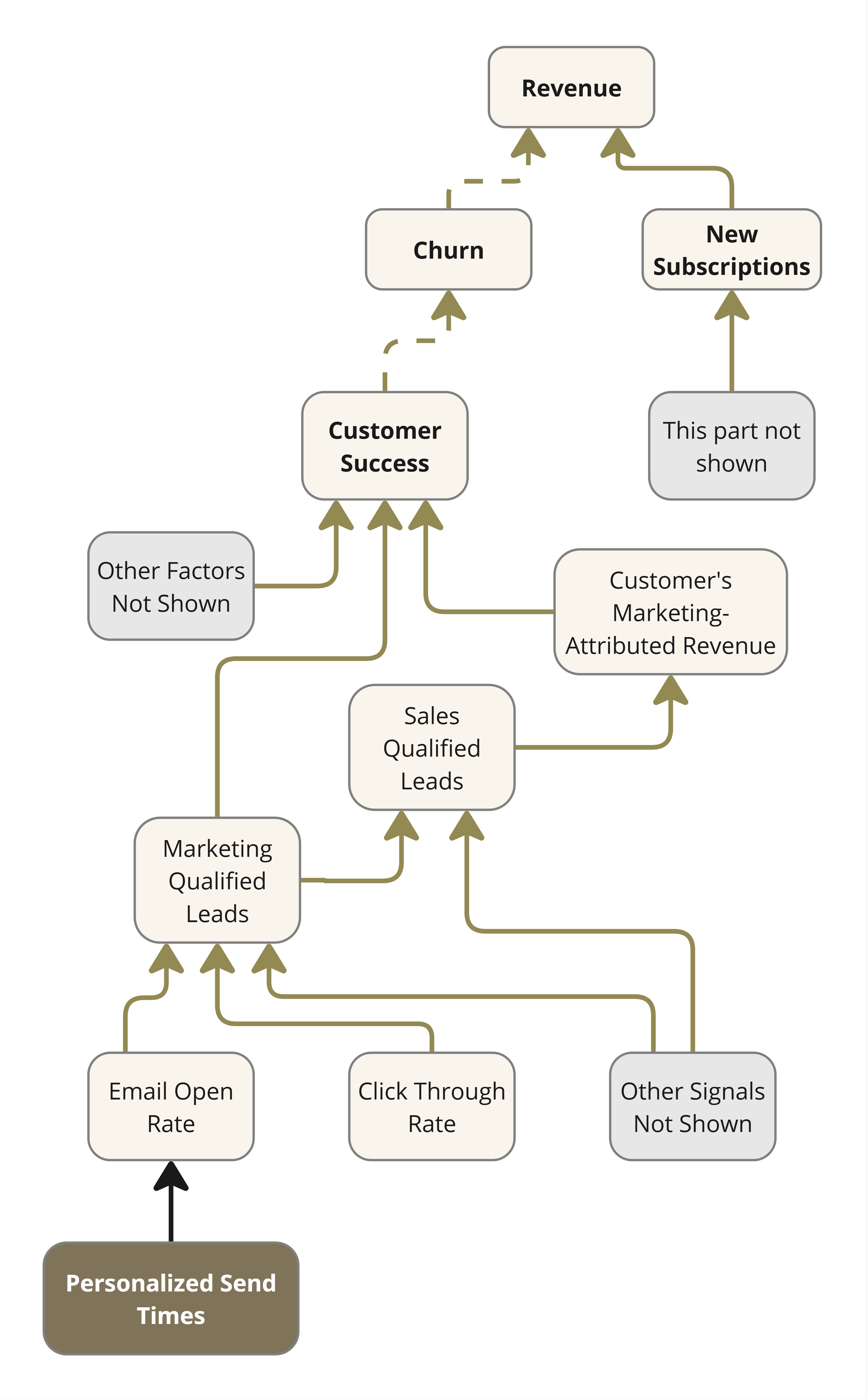

metrics in proximity to an initiative. Figure 2 illustrates this with

the use of a visual that I call an impact network.

It brings out the

inter-linkages between factors that contribute to business impact,

directly or indirectly. It is a bit like a KPI tree, but it can

sometimes be more of a network than a tree. In addition, it follows some

conventions to make it more useful. Green, red, blue, and black arrows

depict desirable effects, undesirable effects, rollup relationships, and

the expected impact of functionality, respectively. Solid and dashed

arrows depict direct and inverse relationships. Except for the rollups (in blue), the links

don’t always represent deterministic relationships.

The impact network is a bit like a probabilistic causal model. A few more conventions

are laid out in the book.

The bottom row of features, initiatives, etc.

is a temporary overlay on the impact network which, as noted earlier, is basically a KPI tree where every node

is a metric or something that can be quantified. I say temporary because the book of work keeps changing

while the KPI tree above remains relatively stable.

Figure 2: An Impact Network with the current Book of Work overlaid.

Typically, the introduction of new features or capabilities moves the

needle on product or service metrics directly. Their impact on

higher-level metrics is indirect and less certain. Direct or first-order

impact, called proximate impact, is easier to notice and claim

credit for. Indirect (higher-order) impact, called downstream impact,

occurs further down the line and it may be influenced by multiple

factors. The examples to follow illustrate this.
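
The impact network and the distinction between proximate and downstream impact can also be sketched as a small data structure. This is a minimal illustration rather than the book's notation: the class names (`Kind`, `Link`, `ImpactNetwork`), the node names, and the links between them are all assumptions made up for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    """Arrow colors, following the conventions described above."""
    DESIRABLE = "green"    # desirable effect
    UNDESIRABLE = "red"    # undesirable effect
    ROLLUP = "blue"        # rollup relationship (the deterministic kind)
    EXPECTED = "black"     # expected impact of functionality


@dataclass(frozen=True)
class Link:
    source: str
    target: str
    kind: Kind
    inverse: bool = False  # True models a dashed arrow (inverse relationship)


class ImpactNetwork:
    def __init__(self):
        self.links = []

    def add(self, source, target, kind, inverse=False):
        self.links.append(Link(source, target, kind, inverse))

    def proximate(self, initiative):
        """Metrics the initiative is expected to move directly."""
        return [l.target for l in self.links
                if l.source == initiative and l.kind is Kind.EXPECTED]

    def downstream(self, initiative):
        """Metrics reachable beyond the proximate ones (breadth-first)."""
        first_order = self.proximate(initiative)
        seen = []
        queue = list(first_order)
        while queue:
            node = queue.pop(0)
            for link in self.links:
                if link.source != node:
                    continue
                if link.target in first_order or link.target in seen:
                    continue
                seen.append(link.target)
                queue.append(link.target)
        return seen


# Hypothetical network: node names and links are illustrative only.
net = ImpactNetwork()
net.add("Send-time personalization", "Email Open Rate", Kind.EXPECTED)
net.add("Send-time personalization", "Click Through Rate", Kind.EXPECTED)
net.add("Email Open Rate", "Click Through Rate", Kind.DESIRABLE)
net.add("Click Through Rate", "Leads Generated", Kind.DESIRABLE)
net.add("Leads Generated", "Renewals", Kind.DESIRABLE)
net.add("Price vs. Competitors", "Renewals", Kind.DESIRABLE, inverse=True)
net.add("Renewals", "Revenue", Kind.ROLLUP)
net.add("New Subscriptions", "Revenue", Kind.ROLLUP)

print(net.proximate("Send-time personalization"))
print(net.downstream("Send-time personalization"))
```

In this sketch, `proximate` returns only the metrics a feature is expected to move directly, while `downstream` follows the remaining links to the higher-level metrics. The traversal says nothing about how strong each link is; a fuller model would attach some estimate of likelihood or effect size to every non-rollup link.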

The rest of this article features smaller, context-specific subsets

of the overall impact network for a business.

Example #1: A Customer Support Chatbot

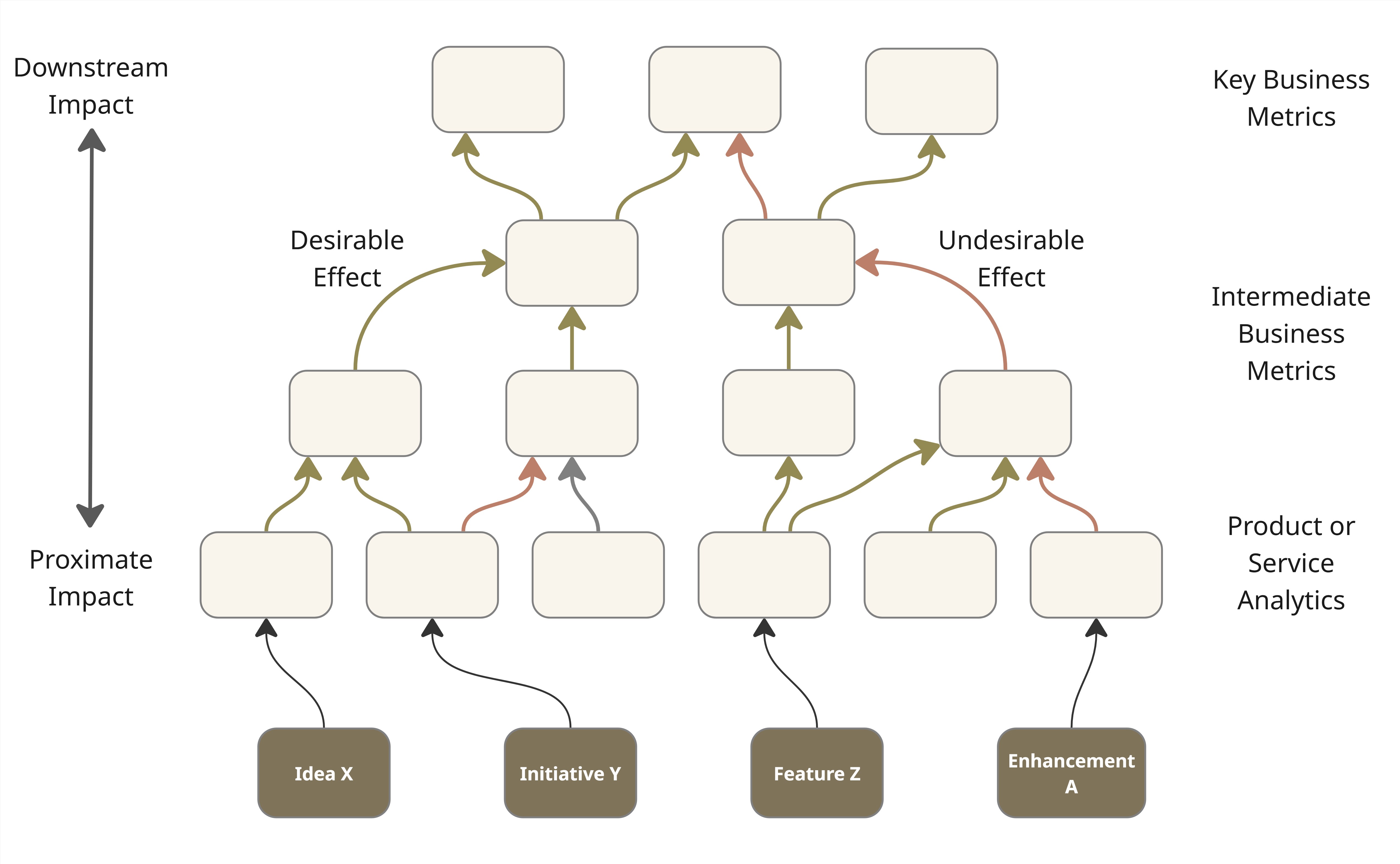

What’s the contribution of an AI customer support chatbot to limiting

call volume (while maintaining customer satisfaction) in your contact

center?

Figure 3: Downstream Impact of an AI Chatbot

It is not enough anymore to assume success based on mere solution

delivery. Or even the number of satisfactory chatbot sessions, which

Figure 3 calls virtual assistant capture. That’s proximate

impact. It’s what the Lean Startup mantra of

build-measure-learn aims for typically. However, downstream

impact in the form of call savings is what really matters in this

case. In general, proximate impact might not be a reliable leading

indicator of downstream impact.

A chatbot might be a small initiative in the larger scheme, but small

initiatives are a good place to exercise your impact intelligence

muscle.

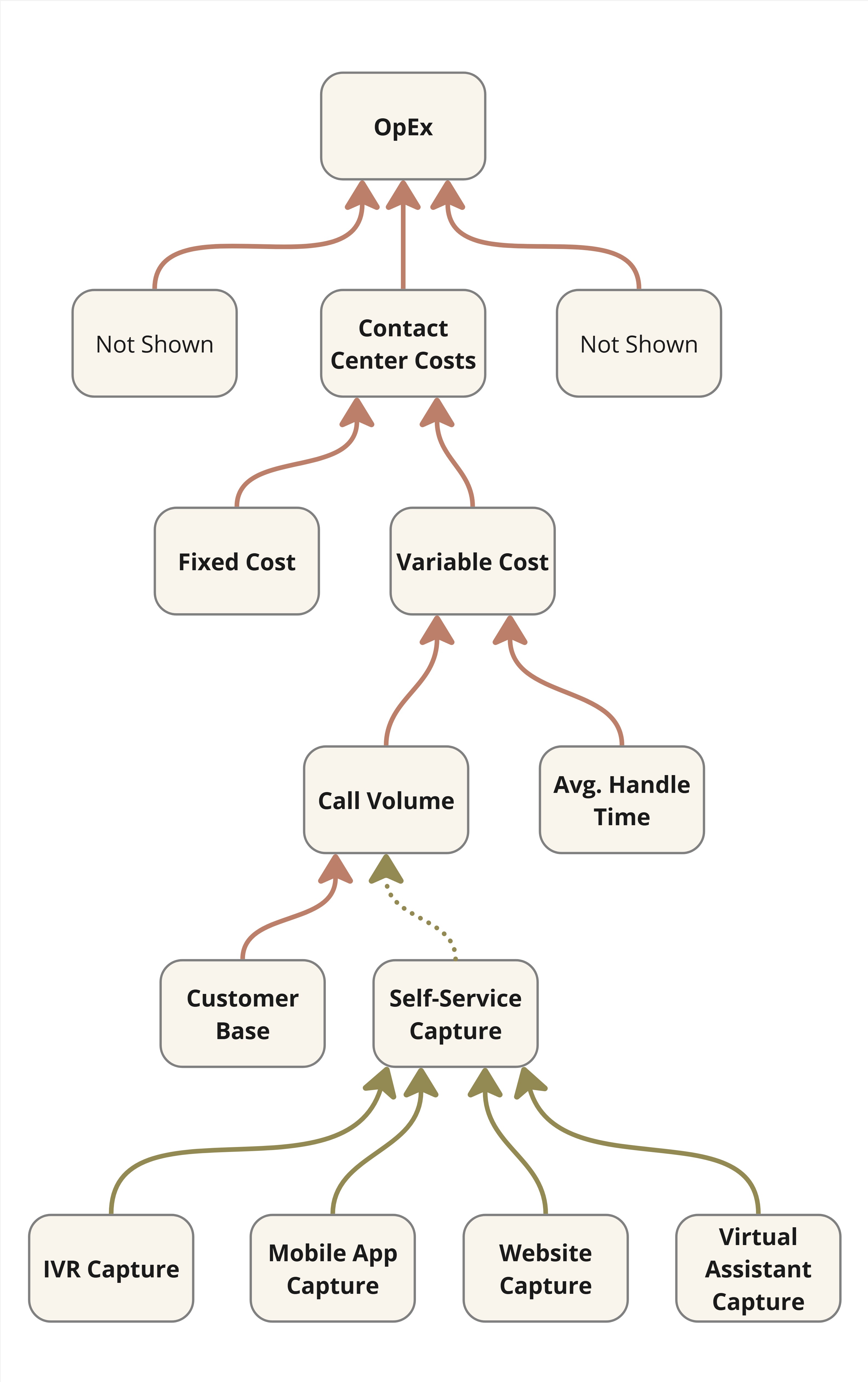

Example #2: Regulatory Compliance AI assistant

Consider a common workflow in regulatory compliance. A compliance

analyst is assigned a case. They study the case, its relevant

regulations and any recent changes to them. They then apply their expertise and

arrive at a recommendation. A final decision is made after subjecting

the recommendation to a number of reviews and approvals depending on the

importance or severity of the case. The Time to Decision might

be of the order of hours, days or even weeks depending on the case and

its industry sector. Slow decisions could adversely affect the business.

If it turns out that the analysts are the bottleneck, then perhaps it

might help to develop an AI assistant (“Regu Nerd”) to interpret and

apply the ever-changing regulations. Figure 4 shows the impact network

for the initiative.

Figure 4: Impact Network for an AI Interpreter of Regulations

Its proximate impact may be reported in terms of the uptake of the

assistant (e.g., prompts per analyst per week), but it is more

meaningful to assess the time saved by analysts while processing a case.

Any real business impact would arise from an improvement in Time to

Decision. That’s downstream impact, and it would only come about if

the assistant were effective and if the Time to initial

recommendation were indeed the bottleneck in the first place.
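
A rough calculation shows why the bottleneck condition matters. The sketch below assumes a deliberately simple model in which Time to Decision is the sum of two sequential stages, the initial recommendation and the subsequent reviews and approvals, and ignores queueing and hand-off delays. The function names and all the hour figures are hypothetical.

```python
def time_to_decision(recommendation_hours, review_hours):
    """Assumed model: two sequential stages, no waiting time in between."""
    return recommendation_hours + review_hours


def downstream_improvement(recommendation_hours, review_hours, analyst_time_saved):
    """Fractional reduction in Time to Decision when the assistant cuts the
    time to initial recommendation by `analyst_time_saved` (0.0 to 1.0)."""
    before = time_to_decision(recommendation_hours, review_hours)
    after = time_to_decision(
        recommendation_hours * (1 - analyst_time_saved), review_hours
    )
    return (before - after) / before


# Same 50% analyst time saving, two hypothetical cases of 40 hours each.
reviews_dominate = downstream_improvement(8, 32, 0.5)
analysts_dominate = downstream_improvement(32, 8, 0.5)

print(f"Reviews are the bottleneck:  {reviews_dominate:.0%}")   # 10%
print(f"Analysts are the bottleneck: {analysts_dominate:.0%}")  # 40%
```

Under these assumptions, the same proximate gain (analysts working twice as fast) yields a 10% or a 40% improvement in Time to Decision depending on where the time actually goes.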

Example #3: Email Marketing SaaS

Consider a SaaS business that offers an email marketing solution.

Their revenue depends on new subscriptions and renewals. Renewal depends

on how useful the solution is to their customers, among other factors

like price competitiveness. Figure 5 shows the

relevant section of their impact network.

Figure 5: Impact Network for an Email Marketing SaaS

The clearest sign of customer success is how much additional revenue

a customer could make through the leads generated via the use of this

solution. Therefore, the product team keeps adding functionality to

improve engagement with emails. For instance, they might decide to

personalize the timing of email dispatch as per the recipient’s

historical behavior. The implementation uses

behavioral heuristics from open/click logs to identify peak engagement

windows per contact. This information is fed to their campaign

scheduler. What do you think is the measure of success of this feature?

If you limit it to Email Open Rate or Click Through Rate, you

could verify with an A/B test. But that would be proximate impact only.
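
For reference, such an A/B check on open rate could look like the sketch below. It uses a standard two-proportion z-test and only the Python standard library. The function name and the send and open counts are hypothetical, and the test says nothing about leads, renewals, or revenue further down the network.

```python
from math import erf, sqrt


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Compare two rates (e.g. open rate of control A vs. variant B).

    Returns (absolute lift of B over A, z statistic, two-sided p-value).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("rates are identical at 0% or 100%; test is undefined")
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal CDF, written with erf.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return p_b - p_a, z, p_value


# Hypothetical counts: A uses fixed send times, B uses personalized timing.
lift, z, p_value = two_proportion_z_test(
    successes_a=2000, n_a=10000,   # opens / emails sent, control
    successes_b=2200, n_b=10000,   # opens / emails sent, variant
)
print(f"lift={lift:.3f}, z={z:.2f}, p={p_value:.4f}")
```

A significant lift here confirms movement in a metric close to the feature. Whether that movement carries through to customer revenue and renewals is a separate question that this test does not answer.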

Leverage Points

Drawing up an impact network is a common first step. It serves as a

commonly understood visual, somewhat like the ubiquitous language of

domain-driven design.

To improve impact intelligence, leaders must address the flaws in their

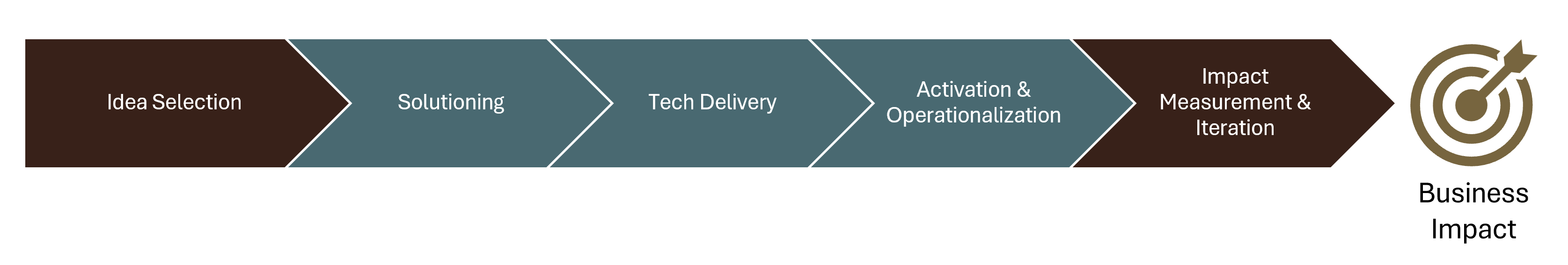

organization’s idea-to-impact cycle ( Figure 6).

Although it is displayed here as a sequence, iteration makes it a

cycle.

Any of the segments of this cycle might be weak but the first (idea

selection) and the last (impact measurement & iteration) are

particularly relevant for impact intelligence. A lack of rigor here

leads to the vicious cycle of spray-and-pray (Figure 1). The segments in the middle are more in the realm

of execution or delivery. They contribute more to impact than to impact

intelligence.

Figure 6: Leverage Points in the Idea to Impact Cycle

In systems thinking, leverage points are strategic intervention

points within a system where a small shift in one element can produce

significant changes in the overall system behavior. Figure 6 highlights the two leverage points for impact

intelligence: idea selection and impact measurement. However, these two

segments typically fall under the remit of business leaders, business

relationship managers, or CPOs (Product). On the other hand, you—a tech

CXO—are the one under productivity pressure resulting from poor

business impact. How might you introduce rigor here?

In theory, you could try talking to the leaders responsible for idea

selection and impact measurement. But if they were willing and able,

they’d have likely spotted and addressed the problem themselves. The

typical Classic Enterprise is not free of politics. Having this

conversation in such a place might only result in polite reassurances

and nudges not to worry about it as a tech CXO.

This situation is common in places that have grown Product and

Engineering as separate functions with their own CXOs or senior vice

presidents. Smaller or younger companies have the opportunity to avoid

growing into this dysfunction. But you might be in a company that is

well past this org design decision.